🍪 TWiC: Maia 200, H200 in China, Micron's $24B Fab, Meta is ↑, MediaTek ASICs ++

This Week in Chips: Key developments and interesting commentary across the semiconductor universe.

Here’s what went down This Week in Chips.

Microsoft/Azure: Maia 200 Accelerator for Inference

Microsoft’s latest Maia 200 accelerator is impressive: it promises 30% better performance per dollar than the hardware Microsoft has in deployment today. Built on TSMC N3P, it pairs 272 MB of SRAM for cache with 216 GB of SK Hynix HBM3E for model weights and KV cache.
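To put the 30% figure in concrete terms, here is a minimal sketch of what a performance-per-dollar gain implies for the cost of a fixed workload. The function name and the normalized baseline of 1.0 are illustrative assumptions, not Microsoft figures.

```python
def relative_cost_for_same_work(perf_per_dollar_gain: float) -> float:
    """Cost of running the same workload, relative to a baseline of 1.0,
    given a fractional gain in performance per dollar (0.30 means 30%)."""
    if perf_per_dollar_gain <= -1.0:
        raise ValueError("gain must be greater than -100%")
    # perf/$ scales by (1 + gain), so dollars per unit of work scale by the inverse.
    return 1.0 / (1.0 + perf_per_dollar_gain)


gain = 0.30  # the 30% figure quoted above
cost = relative_cost_for_same_work(gain)
print(f"Same work costs {cost:.1%} of baseline (saving {1 - cost:.1%})")
```

Note that a 30% gain in performance per dollar works out to roughly a 23% lower bill for the same work, not 30%.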

The exclusivity of SK Hynix HBM3E memory is a key signal here. By being designed into Maia 200, SK Hynix has essentially secured its position as the memory supplier for the entire fleet of Maia inference chips. That supply stability gives SK Hynix long-term visibility into next-gen requirements through 2026 and beyond.

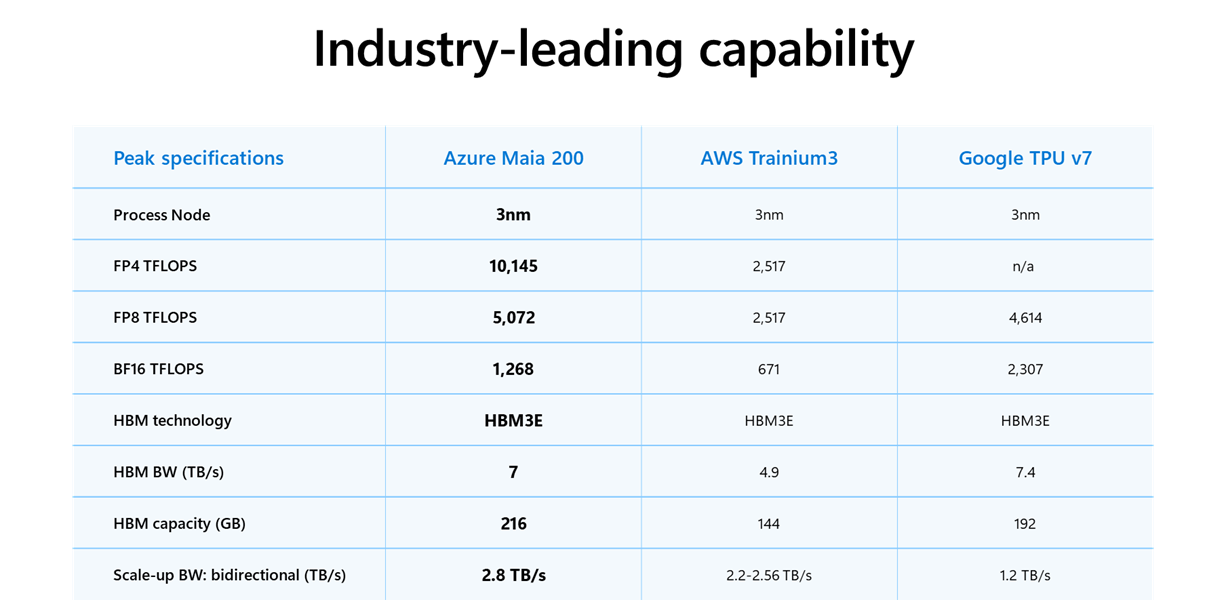

In FP4, Maia 200 provides 2x the performance of AWS Trainium 3, and in FP8 it beats Google TPU v7. It has an integrated NIC for connecting multiple chips together, but that is not new: it has existed since Maia 100. Here is a comparison posted on the Microsoft blog.

In the latest episode of our very own SemiDoped podcast, Austin Lyons speaks with Saurabh Dighe, Corporate VP Azure Systems and Architecture. Lots of great information in here! Check it out.

SCMP: Beijing weighs H200 imports amid uncertainty for China tech giants

China has finally approved the import of Nvidia’s H200 GPUs. The US government had already cleared the sales earlier this month, on the condition that shipment volume does not exceed 50% of what is sold domestically in the US. China has been hesitant to import US GPUs because it has to balance reliance on foreign semiconductors against building its own silicon under its “Buy Local” mandate. The first wave of chips covers over 400,000 units allocated to ByteDance, Alibaba and Tencent.

There is a catch to the revenue Nvidia gets, though. For every H200 sold in China, Nvidia owes the US government 25% of the sale. This is still a major upside for Nvidia, which had to resort to selling “nerfed” H20 GPUs to China. Those were inferior to domestic alternatives like Huawei’s Ascend 910C, so China preferred its own silicon. H200 would be a superior choice for frontier model training, while the 910C might be relegated to inference, which is not a bad use case in a country with a population the size of China’s.
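As a rough sketch of how the two conditions interact, the snippet below applies the 50% volume cap and the 25% cut to the 400,000-unit first wave. It assumes the 25% applies to gross sale revenue. The unit price is a made-up placeholder (Nvidia's actual China pricing is not given here), and the function names are hypothetical.

```python
def max_china_units(us_domestic_units: int, cap: float = 0.50) -> int:
    """Largest China shipment allowed if volume may not exceed `cap`
    times what is sold domestically."""
    return int(us_domestic_units * cap)


def revenue_split(units: int, unit_price_usd: float, us_share: float = 0.25):
    """Return (gross, owed_to_us_government, kept_by_nvidia) in USD,
    assuming the share is taken off gross sale revenue."""
    gross = units * unit_price_usd
    owed = gross * us_share
    return gross, owed, gross - owed


FIRST_WAVE_UNITS = 400_000        # figure from the paragraph above
PLACEHOLDER_PRICE_USD = 30_000.0  # hypothetical price, for illustration only

gross, owed, kept = revenue_split(FIRST_WAVE_UNITS, PLACEHOLDER_PRICE_USD)
print(f"gross ${gross:,.0f} | to US gov ${owed:,.0f} | Nvidia keeps ${kept:,.0f}")
```

Whatever the real price turns out to be, Nvidia keeps 75 cents of every dollar of H200 revenue from China under these terms.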

Micron: Micron Breaks Ground on Advanced Wafer Fabrication Facility in Singapore

Micron is building an advanced wafer fab in Singapore and plans to invest approximately $24B over the next 10 years in NAND technology, driven by the data storage needs of AI datacenters. While the investment is spread out over a decade, there is concern that other NAND manufacturers like Samsung, SK Hynix, and SanDisk will follow suit and add capacity of their own out of fear of falling behind. If AI infrastructure buildout slows down, these companies will be left holding the bag with excess inventory. The memory industry saw this pattern as recently as 2023, which is why big supply commitments are often met with skepticism. They’ve been there, done that, and got burned before.

It all comes down to how much demand we will see for storage. With the rise of agentic AI and, more recently, with everyone realizing the “agentic benefits of ClawdBot/Moltbot despite security concerns,” the period of explosive data growth may still be in its infancy. If demand rises faster than supply, it may turn out to be a good thing that NAND manufacturers built all that capacity.

TrendForce: Seagate Q3 Guidance Tops Estimates, Nearline HDD Capacity Fully Booked Through 2026

While the memory industry has been in a supercycle, with some memory stocks like SanDisk surging 1,000% in the last year, storage stocks might just be getting started. Earlier in this newsletter, we discussed the growing need for storage as generative AI comes online and more people use it for YouTube videos, social media posts, etc. Nvidia’s announcement of context memory storage at CES 2026 brought renewed focus on why flash storage is so important. However, flash is inherently expensive on a $/TB basis, which is why hard disk drives are typically the best option for large-scale storage.

In its recent earnings call, Seagate reported a 22% year-over-year increase in revenue and a 26% year-over-year increase in total storage capacity shipped (190 EB). Seagate also said its HDDs are fully booked through 2026, and it is now taking orders for 2027. In short, demand for large-scale storage remains strong.
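For a quick back-of-the-envelope read on those Seagate numbers, the sketch below backs out the implied year-ago capacity and the change in revenue per exabyte. It assumes the 190 EB is the figure after the 26% increase and, as a simplification, that all revenue tracks capacity shipped. The function names are illustrative.

```python
def prior_period_value(current: float, yoy_growth: float) -> float:
    """Back out the year-ago value from the current value and YoY growth."""
    return current / (1.0 + yoy_growth)


def revenue_per_unit_change(revenue_growth: float, capacity_growth: float) -> float:
    """Fractional YoY change in revenue per unit of capacity shipped."""
    return (1.0 + revenue_growth) / (1.0 + capacity_growth) - 1.0


CAPACITY_EB = 190.0      # exabytes shipped, from the paragraph above
CAPACITY_GROWTH = 0.26   # 26% YoY
REVENUE_GROWTH = 0.22    # 22% YoY

prior_eb = prior_period_value(CAPACITY_EB, CAPACITY_GROWTH)
per_eb = revenue_per_unit_change(REVENUE_GROWTH, CAPACITY_GROWTH)
print(f"Implied year-ago capacity: {prior_eb:.1f} EB")
print(f"Implied change in revenue per EB: {per_eb:.1%}")
```

Under those assumptions, capacity grew slightly faster than revenue, so revenue per exabyte dipped by about 3% even as total demand climbed.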