Understanding High-Voltage DC Power Architectures for AI Megafactories

Why traditional designs cannot power GigaWatt-class datacenters, and how new power system architectures are evolving to meet the demand.

Welcome to a 🔒 subscriber-only Sunday deep-dive edition 🔒 of my weekly newsletter. Each week, I help readers learn about chip design, stay up-to-date on complex topics, and navigate a career in the chip industry.


This week, Mark Zuckerberg announced that Meta’s first multi-gigawatt datacenter named Prometheus will come online in 2026. Another supercluster called Hyperion will scale up to 5 gigawatts in the coming years. Just days before, SemiAnalysis published an excellent analysis on Meta’s giant push towards AI dominance.

A gigawatt-class datacenter draws roughly the output of a Westinghouse AP1000 nuclear reactor (about 1.1 GW of electrical power). Think about that for a minute: a nuclear reactor to power just one datacenter! Assuming an average US household draws a little over 1 kW, a continuous gigawatt is enough for several hundred thousand homes. Or, if you want to roll like Meta, you can even build a natural gas plant on premises to keep the chips fed.

Traditional CPU racks in datacenters used to consume 5-15 kilowatts (kW) per rack, and early AI systems consumed 20-40 kW per rack. By 2027, NVIDIA's Kyber system is projected to demand 600 kW per rack with the Vera Rubin Ultra series of chips, well over an order of magnitude above CPU racks from five years ago and 3x the power density of today’s leading racks. Future systems will scale to 1,000 kW (1 MW) per rack. At that point, we are running enough electricity to power on the order of 1,000 American homes into a space the size of a filing cabinet.

In this post, we’ll unpack the growing power demands of AI datacenters and why traditional power system designs in datacenters can’t keep up. We will discuss:

How AI datacenters are physically structured: We will look at how an AI datacenter is laid out using Meta’s legacy H-shaped design as an example.

How electricity flows from the grid to the chip: We will discuss how power is transferred from substations to voltage regulators inside the rack to power compute and networking hardware.

Why today’s power architecture breaks at GigaWatt scale: Scaling limitations as power requirements exceed certain threshold levels.

For paid subscribers:

The pros and cons of different high voltage strategies: From 400V to single-ended and differential 800V and what Google, Meta and NVIDIA are building.

What new architectures are emerging: We will look at new options for how utility power is delivered to datacenter racks, disaggregated power, and sidecar racks.

Read time: 13 mins

A Deeper Look at the Structure of an AI Datacenter

To understand how power systems should be built for high-powered datacenters, it helps to understand the physical construction of an AI datacenter.

As an example, take Meta’s classic H-shaped datacenter structure shown in the figure below. This is how Meta used to build datacenters, but the company has since scrapped the design because it takes too long to build and is not well suited to future high-power AI datacenters. Meta even demolished one that was already built. Existing H-shaped facilities are still in use, however, and they serve our educational purposes well here. You can see one at 40°04'05"N 82°45'01"W on Google Earth.

The whole H-shaped unit is just over a million square feet in area (~1,000 ft x 1,000 ft). The middle “bridge” of the H is likely the core services corridor: fiber aggregation, main mechanical/electrical rooms, fire suppression, operations support, and possibly offices or staging areas. The left and right wings are data halls which house compute hardware.

There are a total of 8 data halls, 4 per side. Each data hall is approximately 35,000 sq ft in area (~270 ft x 130 ft). One can conjecture that a data hall has two data pods, each designed to operate independently, with its own power and cooling resources. Each data pod gets its own diesel generator for backup power, with one spare (N+1 redundancy). Adjoining each data hall is an electrical room that contains backup battery power and the switches that connect either utility power or the diesel generator to the racks. Each pod also has cooling systems to remove the heat generated by the compute racks; their detailed design is beyond the scope of this post.

Our main focus here is how power is transferred from the utility service to the compute racks housing CPUs and GPUs, and the electrical systems that make this happen.

An Overview of Power System Design

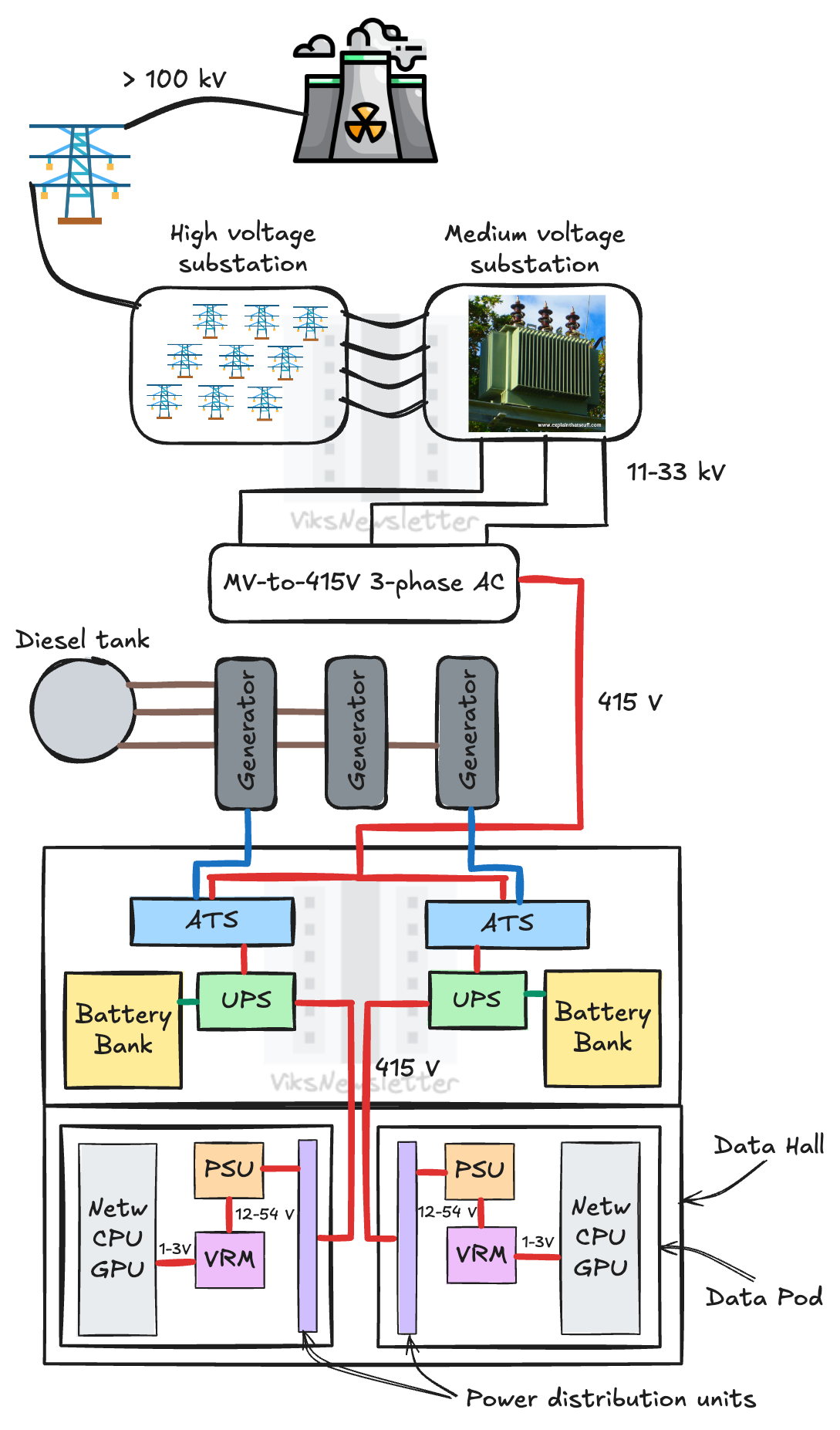

SemiAnalysis has an in-depth article on datacenter electrical systems which I recommend you read if you want the details. I’ll provide a short overview here. The following diagram will be helpful to follow along in the subsequent subsections.

Utility to Datacenter

In an ideal world, we want power generation colocated on the same campus as the datacenter, whether nuclear or natural gas. This is because transmitting power over long cables is wasteful, with a portion of it lost as heat in the conductors. To minimize these losses, power is transmitted at high-voltage (>100 kV) or medium-voltage (11-33 kV) levels. If the utility delivers only high voltage, it must be stepped down to medium voltage at an on-site substation. Slightly north of the Meta datacenter shown above, you will see an electrical substation that converts high voltage to medium voltage using high-power transformers.

Datacenter to Utility Room

The medium-voltage power is then distributed throughout the datacenter, right up to the electrical room adjoining each data hall. Another transformer here steps the 11-33 kV supply down to 415 V 3-phase AC. At this point, a diesel generator with about 3 MW capacity can be switched in by an Automatic Transfer Switch (ATS), located in the utility room, to power the data hall if the utility fails. A spare diesel generator covers the case where a generator fails at the same time as the utility.

Utility Room to Data Pod

Downstream of the ATS and within the electrical room are UPS units that provide power to the racks. The purpose of the UPS system is to provide backup power till the generators start up in case of power failure. These diesel generators can take up to a minute to start up, and sometimes require multiple startup attempts. The UPS has about 10 minutes of battery backup just till the generators kick in. There is a generator and UPS system for every pod in a data hall.
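As a rough sizing sketch, the energy a UPS must store is simply the load it carries multiplied by the ride-through time. The example below assumes the pod load equals the roughly 3 MW generator capacity mentioned earlier; that pairing is an illustrative assumption, not a published figure, and the function name is hypothetical.

```python
def ups_energy_kwh(load_kw: float, ride_through_min: float) -> float:
    """Energy (kWh) a UPS must deliver to carry load_kw for ride_through_min minutes."""
    return load_kw * ride_through_min / 60.0


# Assumption: a 3,000 kW pod load (matching the ~3 MW generator) and 10 minutes of backup.
pod_load_kw = 3000.0
backup_min = 10.0

energy_kwh = ups_energy_kwh(pod_load_kw, backup_min)
print(f"UPS energy needed: {energy_kwh:.0f} kWh")
```

Under these assumptions, each pod needs about half a megawatt-hour of usable battery energy before accounting for battery aging or discharge limits.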

The UPS power is routed into the data pods and to the rack units using Power Distribution Units (PDUs), which are either mounted on the rack itself or placed separately from it. These are glorified power strips that distribute AC power to all elements in the rack. The 415 V AC supply is then converted to 12 V DC (or, more recently, 48 V or 54 V) using Power Supply Units (PSUs). This voltage is then distributed through copper busbars to the various components that need power in the rack.

An implementation detail worth mentioning here is that the PSU can be implemented on a per-compute-tray basis, or at the rack-level. A PDU is not necessary when the AC-DC conversion occurs at the rack-level. The DC power can be distributed using busbars, and depending on the power levels involved, might need busbar cooling systems too.

Finally, the 12-54V DC power is converted to the voltages the chip actually needs (1-3V) using DC-DC converters in Voltage Regulator Modules (VRMs).
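To see why every conversion stage matters, here is a minimal sketch that multiplies per-stage efficiencies along the grid-to-chip path described above. The efficiency values are hypothetical placeholders chosen only for illustration; real numbers vary by equipment and load.

```python
from math import prod

# Hypothetical per-stage efficiencies (illustrative only, not measured values).
STAGES = [
    ("MV transformer (11-33 kV -> 415 V AC)", 0.99),
    ("UPS", 0.96),
    ("PSU (415 V AC -> 48/54 V DC)", 0.96),
    ("VRM (48/54 V DC -> chip voltage)", 0.90),
]


def chain_efficiency(stages) -> float:
    """End-to-end efficiency is the product of the individual stage efficiencies."""
    return prod(eff for _, eff in stages)


def power_at_chip_kw(input_kw: float, stages) -> float:
    """Power that reaches the chip for a given input power at the start of the chain."""
    return input_kw * chain_efficiency(stages)


total = chain_efficiency(STAGES)
print(f"End-to-end efficiency: {total:.1%}")
print(f"Of 1,000 kW in, {power_at_chip_kw(1000.0, STAGES):.0f} kW reaches the chips")
```

With these made-up numbers, only about 82% of the input power reaches the silicon, even though no single stage is worse than 90% efficient.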

Lots more intricacies

This is just one way to implement a datacenter and power system. Every major hyperscaler builds its infrastructure in its own way, and much of it is undisclosed. There is a lot more detail that this simple description misses. For example, instead of a central UPS, the rack itself can have built-in Battery Backup Units (BBUs); this is actually the more common approach.

Redundancies are set up at every level, from substations to PDUs to UPSes, to ensure that the rack has power at all times. Remote Power Panels (RPPs) are used to monitor and deliver power to the racks. There are capacitor banks to smooth out current draws to avoid overloading the infrastructure with spikes during training runs. Then there are the cooling system designs, which are incredibly complex. We won’t get into everything here.

Why This Architecture Fails for GW-Class Datacenters

Instead, let us examine the many reasons why this architecture fails when AI datacenter power needs reach GigaWatt levels.

1. Conversion Inefficiency

A key metric in any datacenter buildout is Power Usage Effectiveness (PUE), which is defined as the ratio of total facility energy to the energy usage of IT equipment in racks.

Ideally, this number should be 1 which means that all the facility energy is used in running compute hardware. However, voltage conversions, cooling, battery backups, all consume power and therefore PUE values are often between 1.05 and 1.5. For example, Google reported that their average 2025 PUE across their fleet of datacenters is 1.09 over a 12 month period, Amazon reported a global PUE of 1.15 in 2024, and Meta reported an average PUE of 1.09 for 2023.

As power levels scale up, the energy overhead of AC-DC and DC-DC conversion becomes a bottleneck. The higher currents flowing in the converters and distribution systems lead to more I²R losses, which lower efficiency and increase the need for more powerful cooling systems. All these factors push PUE higher (worse), and at GW scale, a PUE of 1.1 on 1 GW of IT load means that 100 MW (an amount that can support an entire datacenter today) is being spent as overhead.
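The overhead arithmetic follows directly from the PUE definition. The sketch below assumes the 1 GW figure refers to IT load (an interpretation, since PUE could equally be applied to a 1 GW total facility budget) and uses hypothetical function names.

```python
def facility_power_mw(it_load_mw: float, pue: float) -> float:
    """Total facility power, from PUE = total facility power / IT power."""
    return it_load_mw * pue


def overhead_mw(it_load_mw: float, pue: float) -> float:
    """Power spent on everything other than IT equipment."""
    return it_load_mw * (pue - 1.0)


IT_LOAD_MW = 1000.0  # assumption: 1 GW of IT load

for pue in (1.09, 1.10, 1.15, 1.50):
    total = facility_power_mw(IT_LOAD_MW, pue)
    extra = overhead_mw(IT_LOAD_MW, pue)
    print(f"PUE {pue:.2f}: total {total:,.0f} MW, overhead {extra:,.0f} MW")
```

At this scale, each 0.01 of PUE corresponds to about 10 MW of non-IT power.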

2. Weight of Copper Busbars

As we briefly discussed earlier, copper busbars are solid chunks of metal that carry power from the PSUs to compute trays. In traditional datacenters, they usually run on 12V DC rails which is sufficient for racks that consume 5-10 kW. As the power usage per rack approaches 60 kW or more, a DC rail voltage of 48 V (in Meta’s Open Compute Project (OCP) Racks) or 54 V (in NVIDIA racks) is used so that currents through the copper busbar can be lowered. This avoids having to increase the amount of copper to reduce resistance.

Explanation: Power = Current x Voltage, so for the same power, a higher voltage means a lower current. The power lost as heat is current squared times resistance. At high current, you can cut the heat loss by making conductors thicker to lower their resistance, but lowering the current is the better option because the loss falls with the square of the current.
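A quick numerical sketch makes the square-law effect concrete. It applies P = I x V and P_loss = I² x R to a 1 MW rack at several rail voltages. The busbar resistance is a hypothetical value picked only for illustration; real busbars are sized differently at each voltage.

```python
def busbar_current_a(power_w: float, voltage_v: float) -> float:
    """Current (A) needed to deliver power_w at voltage_v, from P = I * V."""
    return power_w / voltage_v


def resistive_loss_w(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Heat dissipated in the conductor (W), from P_loss = I^2 * R."""
    current_a = busbar_current_a(power_w, voltage_v)
    return current_a ** 2 * resistance_ohm


RACK_POWER_W = 1_000_000        # 1 MW rack, as discussed in the text
BUSBAR_RESISTANCE_OHM = 1e-4    # hypothetical 0.1 milliohm, for illustration only

for voltage_v in (12, 48, 54, 800):
    current_a = busbar_current_a(RACK_POWER_W, voltage_v)
    loss_w = resistive_loss_w(RACK_POWER_W, voltage_v, BUSBAR_RESISTANCE_OHM)
    print(f"{voltage_v:>4} V: {current_a:>9,.0f} A, loss {loss_w:>11,.0f} W")
```

For a fixed resistance, moving from 54 V to 800 V cuts the current by about 15x and the resistive loss by about 220x, which is why raising the voltage beats adding copper.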

As power usage from a single rack scales to between 500 kW and 1 MW, using 54 V rails is simply not practical. In its blog post, NVIDIA estimates that a single 1 MW rack would require 200 kg of copper busbar. A GW-class datacenter (roughly 1,000 such racks) would then consume close to half a million pounds of copper. The only alternative is to go to higher voltages, as we will see later.
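The copper estimate can be checked with a few lines of arithmetic. This sketch assumes, for simplicity, that the entire 1 GW is delivered to 1 MW racks, each needing the 200 kg of busbar quoted above; the function name is hypothetical.

```python
KG_PER_LB = 0.45359237  # exact definition of the international pound


def total_copper_kg(facility_mw: float, rack_mw: float, copper_kg_per_rack: float) -> float:
    """Busbar copper for a facility, assuming all power goes to identical racks."""
    rack_count = facility_mw / rack_mw
    return rack_count * copper_kg_per_rack


copper_kg = total_copper_kg(facility_mw=1000.0, rack_mw=1.0, copper_kg_per_rack=200.0)
copper_lb = copper_kg / KG_PER_LB

print(f"Copper: {copper_kg:,.0f} kg (about {copper_lb:,.0f} lb)")
```

That works out to 200,000 kg, or roughly 440,000 lb, which is where the half-a-million-pounds figure comes from.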

3. Number of Power Modules in a Rack

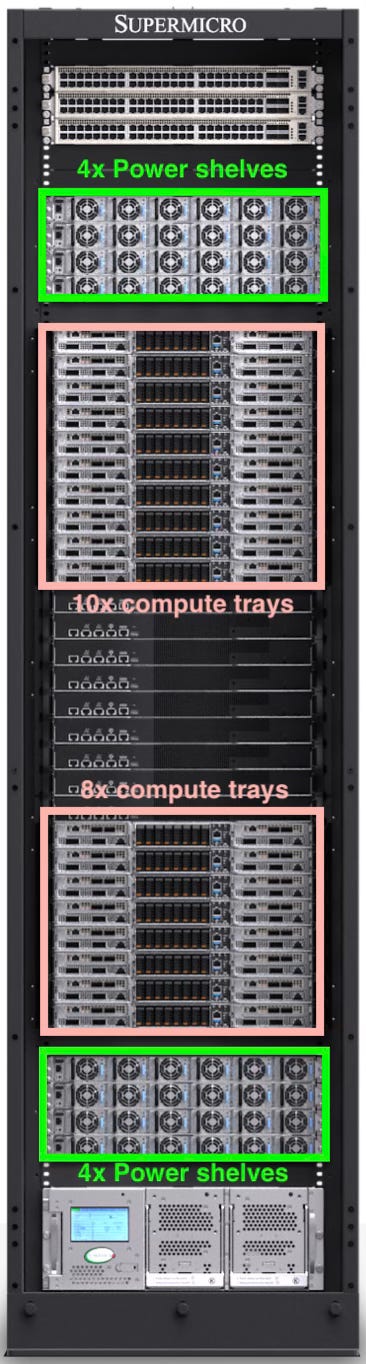

The figure shows a 48-unit (48U) tall NVL72 rack commonly used with Grace-Blackwell GB200-based Bianca boards, each of which consists of 1 Grace CPU and 2 B200 GPUs. The rack has 18 compute trays, each containing two GB200 Bianca boards. This means that there are 18 trays x 2 Bianca boards x 2 B200 GPUs = 72 GPUs in the rack, hence the name NVL72 (NVL = NVLink, the network fabric used to operate all 72 GPUs as if they were one giant GPU).

There are 8 power shelves in the rack. Each shelf has six 5.5 kW PSUs, for a total of 6 x 5.5 kW = 33 kW per shelf. Across eight shelves, the total power capacity is 8 x 33 kW = 264 kW, half of which is allocated to the compute trays and the other half to the NVLink networking load.

If the same rack were to support 1 MW of power, we would need roughly four times the number of power shelves, or about 32. This leaves little room for the compute, networking, management and cooling functions in the rack, since an NVL72 rack has only 48 rack units.
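The shelf count can be sketched from the PSU ratings given above. The one-rack-unit shelf height is an assumption made here for illustration, and the helper names are hypothetical.

```python
import math

PSU_KW = 5.5          # per-PSU rating from the text
PSUS_PER_SHELF = 6    # PSUs per power shelf from the text
RACK_UNITS = 48       # total rack height from the text
SHELF_HEIGHT_U = 1    # assumption: each power shelf occupies 1U

SHELF_KW = PSU_KW * PSUS_PER_SHELF  # 33 kW per shelf


def shelves_needed(rack_kw: float) -> int:
    """Minimum number of power shelves whose combined rating covers rack_kw."""
    return math.ceil(rack_kw / SHELF_KW)


def units_left(rack_kw: float) -> int:
    """Rack units remaining for compute, networking and cooling."""
    return RACK_UNITS - shelves_needed(rack_kw) * SHELF_HEIGHT_U


for rack_kw in (264, 600, 1000):
    print(f"{rack_kw:>5} kW: {shelves_needed(rack_kw):>2} shelves, "
          f"{units_left(rack_kw):>2}U left for everything else")
```

Exact division gives 31 shelves for 1 MW, in line with the four-times estimate of about 32. Either way, power shelves would take up roughly two-thirds of the rack.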

The NVIDIA Kyber system demonstrated at GTC 2025 was an NVL576 architecture: 576 B200 GPUs in a single rack. Here is how it breaks down: 36 compute trays x 8 GB200 superchips per tray x 2 B200 GPUs per GB200 superchip = 576 GPUs. The PSUs and cooling for such a system were implemented in a separate ‘sidecar’ rack powered by 800 V DC. There is simply too much compute in a single rack, and future systems may well dedicate a separate rack to non-compute functions.

As part of the Open Compute Project (OCP), Google pioneered the move from 12V to 48V DC in the early 2010s which helped scale rack level power from 10 kW to 100 kW. As AI compute power needs per rack reach 1 MW, there is a need to go to even higher DC rack voltages. We’ll dive into the details of what is coming down the pipeline in high power datacenters after the paywall — specifically:

The pros and cons of different high voltage options

Four power architectures in the datacenter

Disaggregation of power and denser compute