How AI Demand Is Driving a Multi-Year Memory Supercycle

AI's insatiable hunger for HBM, DRAM, and most recently NAND is reshaping the memory market.

Today’s post is a discussion of a recent trend in semiconductor memory.

In my recent guest post on the AI Supremacy newsletter (I will crosspost the article on this newsletter in the future), I explained why whoever gains control of the HBM memory supply dominates the AI race. This is highly relevant to where we are in memory today. Check it out below (partially paywalled).

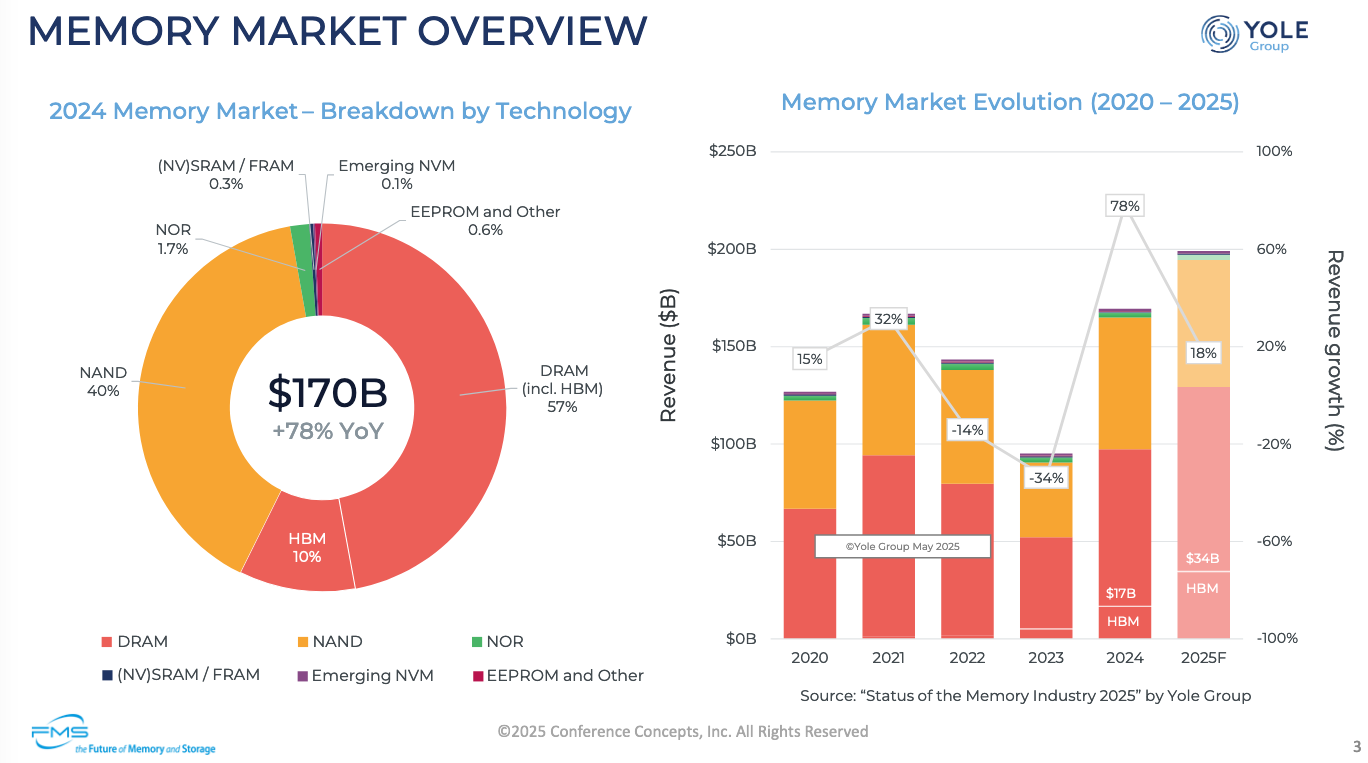

AI’s insatiable demand for high-performance memory, along with other factors, is lifting demand across the AI infrastructure buildout. After 2023’s brutal dip in memory revenues, fueled primarily by excess inventory, memory companies such as Micron, SK Hynix and Samsung are now in the driver’s seat and have raised DRAM prices by approximately 20%. NAND and SSD prices are higher too, by about 10%. With the release of OpenAI’s Sora 2 and Meta’s AI-generated video platform Vibes, the demand for NAND-based storage is set to skyrocket.

This has led many people to ask whether we are now at the start of a new “memory supercycle” in which memory suppliers will see substantial revenue growth over the next 3-5 years. Let’s discuss various aspects of the recent memory market.

The DDR4 Backflip

Without question, the rise of HBM has given new life to the otherwise commodity business of memory. Since HBM is built from stacked DRAM chips (read the HBM deep-dive if you need a detailed explanation of how HBM is built), memory makers have more incentive to build DRAM for HBM than standalone DRAM, because HBM commands a higher price.

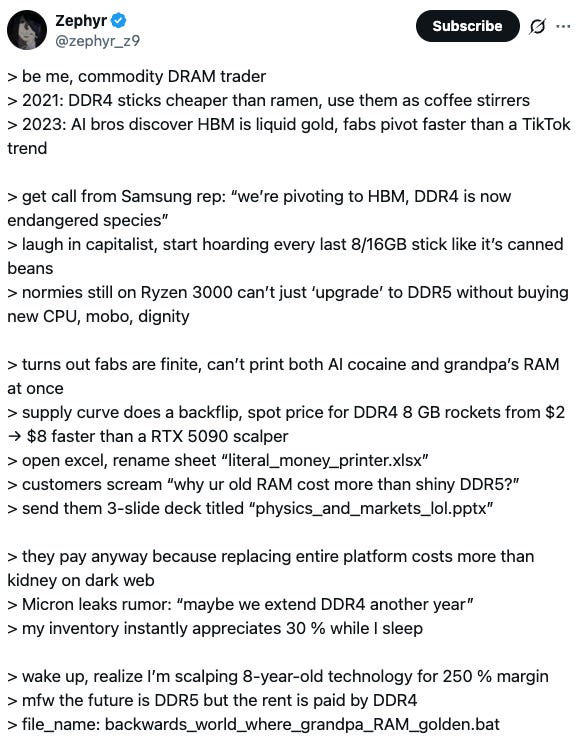

Memory makers declared DDR4 end-of-life to make room for production of DRAM for HBM instead. This led to DDR4 prices spiking more than four-fold because OEMs who still use DDR4 in existing hardware rushed to buy as much supply as possible before it became unavailable. Zephyr explains this best in his greentext-style X post.

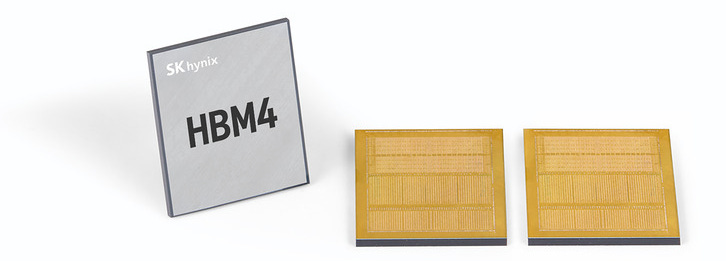

Battle for HBM4 Dominance

Samsung, who were struggling with yield issues on HBM3E, appear to have finally passed qualification for use in NVIDIA systems. Since they were late on HBM3E, they likely missed NVIDIA orders in 2026 because SK Hynix and Micron were there first. AMD, however, announced a few months ago that they are using Samsung’s HBM3E in the MI350 accelerator platform. But just as Samsung cleared this HBM3E hurdle, SK Hynix announced that HBM4 is ready for mass production.

HBM is already a very expensive type of memory; HBM4 will only be more so, and establishing dominance in the market is a key priority for a memory maker. As we discussed in an earlier deep-dive, SK Hynix’s use of MR-MUF for underfill in the DRAM stack provides distinct advantages over its competitors. Plus, they nailed the performance. While the JEDEC spec only requires a data rate of 8 Gbps/pin, SK Hynix demonstrated production-ready speeds of 10 Gbps/pin, likely because their base die is built on a true logic node such as TSMC’s 5nm.

This has thrown a wrench in Micron’s bid for HBM4 dominance.

Micron has reportedly been sampling HBM4 to customers with a 9 Gbps/pin data rate, but the bar is being raised in the industry, with buyers like NVIDIA demanding 11 Gbps/pin data rates. Micron seemingly has a problem now, although they claimed on their recent earnings call that their HBM delivers stellar performance.
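To put these per-pin numbers in perspective, here is a rough sketch that converts pin data rate into peak bandwidth per HBM4 stack. It assumes a 2048-bit interface per stack, which is my understanding of the JEDEC HBM4 spec but is not stated above, and it ignores real-world efficiency losses. The function and variable names are made up for illustration.

```python
# Rough peak-bandwidth arithmetic for one HBM4 stack.
# ASSUMPTION: 2048 data pins per stack (not stated in the post itself).
HBM4_INTERFACE_BITS = 2048


def stack_bandwidth_tbps(gbps_per_pin: float,
                         interface_bits: int = HBM4_INTERFACE_BITS) -> float:
    """Return peak bandwidth of one stack in TB/s (decimal units)."""
    gigabits_per_s = gbps_per_pin * interface_bits  # all pins in parallel
    gigabytes_per_s = gigabits_per_s / 8            # bits -> bytes
    return gigabytes_per_s / 1000                   # GB/s -> TB/s


# Per-pin data rates quoted in the post (Gbps/pin).
PIN_RATES = {
    "JEDEC baseline": 8.0,
    "Micron samples (reported)": 9.0,
    "SK Hynix demo": 10.0,
    "NVIDIA ask (reported)": 11.0,
}

if __name__ == "__main__":
    for label, rate in PIN_RATES.items():
        print(f"{label}: {rate:g} Gbps/pin -> "
              f"{stack_bandwidth_tbps(rate):.2f} TB/s per stack")
```

Under that assumption, the 8 Gbps/pin baseline works out to roughly 2 TB/s per stack, and each extra 1 Gbps/pin adds about a quarter of a TB/s. Multiply that across several stacks per accelerator and it is easier to see why buyers push hard on pin speed.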

Micron uses an in-house memory node, the 1-beta, which is a 10nm-class node, in the base die for HBM instead of a true logic node like 5nm. Up until even HBM3E, it was common practice to build the base die, which handles all the interconnect PHY, on a memory node.

While cheaper, memory nodes do not have the fastest transistors and, as a consequence, pushing to higher pin data rates is harder. Micron is seemingly remedying this in HBM4E, having announced that they are working with TSMC on the base die. Also, read Irrational Analysis’ discussion of Micron’s earnings call.

Is a 5nm logic node required in the base die just to push past the 10-11 Gbps mark, while the 1-beta memory node reportedly delivers 9 Gbps already? Something does not add up here. Going to a transistor node like 5nm that is built for speed should give much more performance benefit. If you’re an experienced PHY designer, reply and let me know. There are other benefits to going to a true logic node for base die which we won’t get into here.

High Capacity Storage

The rise of AI video slop is going to cause explosive growth in the need for high-capacity storage devices - primarily NAND flash. Sandisk (SNDK) has already seen its stock price skyrocket this year (up 250% YTD), and this is before the demand for high-capacity storage has even taken off.

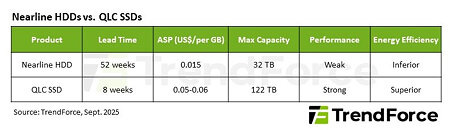

A lot of data, such as backups, archives and large files like AI-generated video, is stored on what are called nearline hard disk drives (HDDs). Nearline sits somewhere between offline and fully online: “warm” data that is available with reasonable latency when necessary.

Ever since the memory market slowdown in 2023, HDD manufacturers have been cautious about ramping up production. With the sudden surge of data from AI applications, HDDs are now facing a supply shortage with lead times of up to one full year, with price hikes burned in (pardon the pun).

This has opened up a unique opportunity for enterprise solid state drives (eSSDs), which offer several advantages in nearline applications. They are denser, have a smaller footprint, generate less heat, consume ~30% less power, and generally have better endurance. They are still several times costlier per GB than HDDs, but with HDD production shortages, SSD upgrades are a better long-term bet given their faster access times.

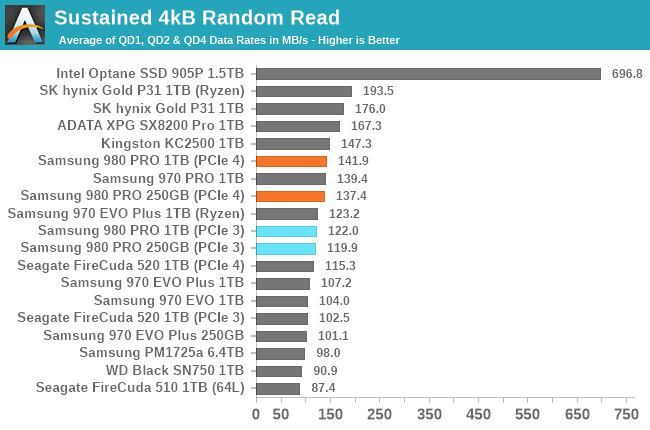

Short aside: It is tragic that Intel wound down their Optane division a mere six months before the launch of ChatGPT. Optane was built on phase change material (PCM) technology (see earlier post about PCM RF switches) and, unlike NAND memory, was very well suited to the small, random read/write operations typical of system RAM - a familiar workload in AI inference - but with much higher capacity than DRAM. In addition, it was blazingly fast compared to even the best SSDs on the market today and had incredible write endurance. This topic really deserves its own post.

For AI applications in particular, quad-level cell (QLC) NAND flash appears especially attractive due to its lower cost and higher storage density, which come at the expense of durability and write lifespan compared to its triple-level cell (TLC) equivalent. This positions QLC-based NAND flash to benefit from the upcoming demand surge from cloud service providers (CSPs) and AI hyperscalers.

For example, SK Hynix’s state-of-the-art 321-layer 2Tb QLC NAND flash has already entered mass production and is expected to release in the first half of 2026. Sandisk recently announced their UltraQLC-based SSDs, which pack 256 TB (yes, terabytes!) of data into a single drive; perfect for infinite AI video slop. In comparison, the largest HDD available for CSPs is the Seagate Exos M, which provides a “mere” 36 TB.

There is always concern about endurance when it comes to QLC-based SSDs. But at that level of storage density and power savings, and considering that HDDs are not reliable to begin with due to their mechanically moving parts, it’s safe to say that SSDs in CSPs and hyperscalers are here to stay - QLC or otherwise.

Closing Thought

In the face of demand exceeding supply in an impending memory supercycle, a rising tide lifts all boats. But maybe some more than others.

Samsung and Micron may have their trip-ups when it comes to HBM4, Samsung with their yield issues and Micron with technology choices in the base die, but SK Hynix does presently appear to hold a strong position in HBM. As with previous generations of HBM, there will be a constant struggle to take market share with major hyperscalers. Given the ASP of HBM and the scale of capex still underway, HBM will be the predominant factor that determines who comes out ahead in this memory supercycle - something to watch out for.

NAND storage is going to take center stage because all that generated video needs to be stored somewhere. HDDs are not going away either because we need all the storage we can get. YouTube contains an estimated 50 Exabytes of data (1 Exabyte = 1 million TB) accumulated over about 20 years. Imagine what a billion users generating AI video will look like!
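For a feel of the scale, here is a back-of-the-envelope sketch of how many drives it would take to hold a YouTube-sized archive, using only the capacities quoted in this post (256 TB per UltraQLC SSD, 36 TB per Exos M HDD, 50 EB total). It is deliberately naive: it assumes raw capacity only and ignores replication, RAID or erasure-coding overhead, and formatted capacity. The helper name is made up for illustration.

```python
import math

# Figures quoted in the post; everything else is simplified.
TB_PER_EB = 1_000_000      # 1 exabyte = 1 million terabytes
YOUTUBE_ARCHIVE_EB = 50    # estimated size of YouTube's data
SSD_CAPACITY_TB = 256      # Sandisk UltraQLC SSD
HDD_CAPACITY_TB = 36       # Seagate Exos M HDD


def drives_needed(total_tb: int, drive_capacity_tb: int) -> int:
    """Whole drives needed to hold total_tb of raw data.

    ASSUMPTION: no redundancy or filesystem overhead is modeled.
    """
    return math.ceil(total_tb / drive_capacity_tb)


if __name__ == "__main__":
    total_tb = YOUTUBE_ARCHIVE_EB * TB_PER_EB
    ssds = drives_needed(total_tb, SSD_CAPACITY_TB)
    hdds = drives_needed(total_tb, HDD_CAPACITY_TB)
    print(f"Archive size: {total_tb:,} TB")
    print(f"256 TB SSDs needed: {ssds:,}")
    print(f"36 TB HDDs needed:  {hdds:,}")
    print(f"HDDs replaced per SSD: {SSD_CAPACITY_TB / HDD_CAPACITY_TB:.1f}")
```

Even with these generous simplifications, the count runs to roughly two hundred thousand of the largest SSDs or well over a million of the largest HDDs, and real deployments with redundancy would need more.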
