🍪 TWiC: Meta+Nvidia, AI Toilet Play, AI EDA SaaSocalypse?

This Week in Chips: Key developments across the semiconductor and adjacent universes.

My deep-dive earlier this week into why CPUs matter for agentic AI was widely read and well received. It lays out a framework for thinking about agentic workloads and uses nine different metrics to match CPUs with right-sized agentic workloads. Industry contacts I’m in touch with confirmed that CPU discussions are becoming increasingly common at hyperscalers. Check it out below if you haven’t.

I am working on a follow-up post, due out next week, that explains the CPU ratings in that post in detail.

Latest Semi Doped podcast episode:

Meanwhile, here’s what went down This Week in Chips.

Meta Builds AI Infrastructure with NVIDIA

When Jensen said at CES 2026 that the future of AI accelerators involves deep codesign across the stack, that included working with the ultimate end users of NVIDIA’s hardware - aka, hyperscalers like Meta. The two companies’ recent collaboration is a step in that direction.

More importantly, here is what the NVIDIA news release says about standalone Grace and Vera CPU deployments:

Meta and NVIDIA are continuing to partner on deploying Arm-based NVIDIA Grace™ CPUs for Meta’s data center production applications, delivering significant performance-per-watt improvements in its data centers as part of Meta’s long-term infrastructure strategy.

The collaboration represents the first large-scale NVIDIA Grace-only deployment, supported by codesign and software optimization investments in CPU ecosystem libraries to improve performance per watt with every generation.

The companies are also collaborating on deploying NVIDIA Vera CPUs, with the potential for large-scale deployment in 2027, further extending Meta’s energy-efficient AI compute footprint and advancing the broader Arm software ecosystem.

The standalone deployment of both Grace and Vera CPUs at hyperscalers is a clear indication of the rising demand for CPUs in the age of AI agents. The orchestration workload directly dictates GPU utilization, and eventually TCO. Depending on the workload, CPUs just might be more important than the GPUs themselves. The mention of the software ecosystem is equally important, because CPUs deployed at scale have to work with a wide variety of tools.
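To make the link between orchestration and TCO concrete, here is a back-of-the-envelope sketch. The numbers are made up for illustration and the function name is hypothetical. The only assumption is that when a GPU sits idle waiting on CPU-side orchestration, you still pay for it, so the cost of each useful GPU-hour scales inversely with utilization.

```python
def cost_per_useful_gpu_hour(hourly_cost: float, utilization: float) -> float:
    """Effective cost of one hour of useful GPU work.

    hourly_cost: all-in cost of running one GPU for an hour (illustrative).
    utilization: fraction of that hour the GPU does useful work, in (0, 1].
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return hourly_cost / utilization


# Hypothetical figures, not measurements from any real deployment.
HOURLY_COST = 3.00

# GPU often waiting on orchestration vs. GPU kept busy by the CPU.
starved = cost_per_useful_gpu_hour(HOURLY_COST, 0.40)
well_fed = cost_per_useful_gpu_hour(HOURLY_COST, 0.80)

print(f"starved: {starved:.2f}/useful hour, well fed: {well_fed:.2f}/useful hour")
```

Under these assumed numbers, doubling utilization halves the cost of useful GPU time, which is why the CPU doing the orchestration can end up mattering as much as the accelerator it feeds.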

This is where x86 has a distinct advantage over Arm-based CPUs, whether off-the-shelf or custom: x86 tool support has existed for ages and is simply more mature. Intel and AMD both stand to benefit greatly from the upcoming CPU demand, even though the recent NVIDIA+Meta announcement caused AMD/INTC stocks to dip. AMD has some really nice CPUs (Venice Dense) that are well suited to agentic workloads, while Intel has been on and off about simultaneous multi-threading (SMT) in its recent CPUs, which leaves it without a clear edge. With NVIDIA getting in on the CPU game, it’ll be interesting to watch how the Arm-based approach works out.

How a Japanese Toilet Maker Could Enter the NAND Market

It seems like everywhere you look these days there is an “overlooked and undervalued” AI play, which, according to one professional investor I spoke to, makes this a great time to invest in semiconductors. This one lies exactly where Lebowski took another look: in the toilet.

The latest “AI opportunity” comes from Japanese toilet maker Toto, best known for heated toilet seats and their “Washlet” bidet. The important thing is that Toto is good for more than just doing your “business”: they also manufacture electrostatic chucks, which are essentially solid hunks of ceramic that silicon wafers sit on, just like we do.

It’s not uncommon for Japanese conglomerates to have divergent product lines. Here are a few more fun examples.

Ajinomoto: The company that first commercialized MSG in 1909 holds over 95% global market share in ABF (Ajinomoto Build-up Film), the insulating film used in virtually every advanced CPU and GPU substrate.

Dai Nippon Printing (DNP): DNP is one of the world’s leading photomask manufacturers for semiconductor lithography. They’re also Japan’s largest printing company, publishing books and magazines since 1876, and they own the Maruzen and Junkudo bookstore chains.

Fujifilm: Fujifilm's semiconductor materials division supplies a variety of photoresists, cleaners, and polyimides to every major chipmaker globally. They also own the Astalift premium skincare line, which is a top-selling anti-aging brand in Japan.

AI EDA, SaaSocalypse, and Moats

It has been a while since I wrote about EDA on this newsletter. But AI-enabled EDA is causing ripples in the industry with a variety of startups addressing the complex workflows of chip design from multiple angles.

Cadence’s (CDNS) recent earnings call was upbeat, with recurring software accelerating to double-digit growth in Q4. Cadence officially announced the release of ChipStack - “world’s first agentic AI solution for automating chip design and verification” - which comes from a startup they acquired late last year. They reported that several chip design companies are using their product suite to achieve improved productivity.

The pressing question in the earnings call was whether AI will erode the EDA business, which is on every analyst’s mind after the recent software selloff. Does AI EDA have a strong enough moat to insulate it from what happened in the software industry recently - widely dubbed the SaaSocalypse - which wiped a trillion dollars off the software markets?

The Cadence CEO, Anirudh Devgan, firmly pushed back on this, saying that EDA is engineering software anchored in physics and that AI tools call Cadence’s base tools to get the job done. He emphasized that agentic EDA workflows are a force multiplier that increases tool usage, with no signs of customers reducing usage. In his telling, AI for EDA amplifies demand for Cadence’s products rather than replacing them, and it is monetized using a three-tier strategy:

Subscription revenue for the core product designed around physics continues to exist

Additional revenue from usage-based pricing for incremental AI-driven capacity via tokens

Outcome-based packages add a further layer: turnkey solutions that customers will pay for to get the desired results quickly

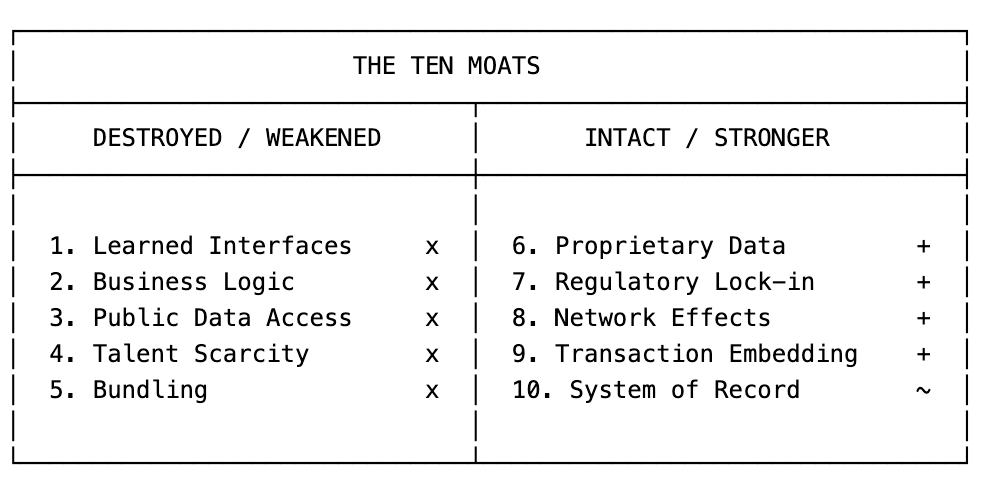

A framework for evaluating the Cadence business model, or just about any other EDA business, can be adapted from a recently viral X article, which proposes that certain kinds of “moats” are easily encroached on by AI while others aren’t.

I recommend that you read the whole article, which is quite thought-provoking. It suggests that we evaluate any software business, EDA or not, by asking three questions. I have added commentary on how each applies to EDA tools.

Is the data proprietary? Yes. For EDA companies and the companies using their tools, the underlying design principles and databases are unique and not easily available outside company walls.

Is there regulatory lock-in? Yes. While no regulatory body dictates which tool must be used for chip design, a company’s history of designing products with certain tools makes it very difficult to switch tools arbitrarily. They are locked in.

Is the software embedded in the transaction? Yes. EDA tools have proprietary simulators under the hood that often solve complex circuit equations to produce performance metrics. These are not easily replaceable.

On all three counts, AI-enabled EDA tools seem safe and not prone to disruption.

Have a great weekend!

If you’re in the mood for more reading, here’s a post from the archives.